Modern “built-in AI” systems have started to reshape everyday life and the business landscape alike. Systems like Google’s BERT are built to handle language much the way humans write and talk. BERT was eventually integrated into Google’s search engine, which tries to guess what we mean and suggests search ideas as we dig in. But did you know that these systems share a problem that is found worldwide, is all too human, and ought to be avoided?

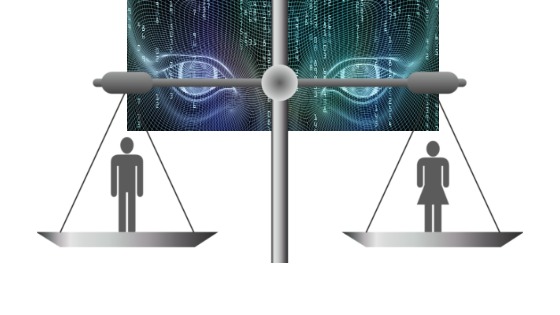

They, too, exhibit gender bias!

They discriminate against women because of the way they are developed, designed and, more than anything else, “taught”.

Natural language processing (NLP) systems and other AI systems learn from huge volumes of digitized information, ranging from old books and Wikipedia entries to social media posts and news articles. Dr Robert Munro, a researcher and computer scientist, found that present-day AI systems exhibit significant bias against women (https://bit.ly/33ESIYj). One example he cites: “hers”, a possessive pronoun, is not recognized even by the most widely used NLP services, including Amazon Comprehend and Google NL API.

The human brain is trained on pronouns together with their correct grammatical usage. AI systems miss this part simply because they are trained on existing online data sets, which are naturally imbalanced. They tend to adopt the most “popular” usages, which end up being discriminatory.

Dr Munro mentions the following reasons for this aberration.

- The algorithms are trained on data with gender imbalances. The data on which these AI systems are trained is created by humans, with all their usual flaws. Even in data sets like Universal Dependencies, masculine pronouns are 2-4 times more frequent than the corresponding feminine ones. That is, these systems learn the wrong things from these basic errors, just as a kid learns wrong things from its parents.

- The algorithms are trained on text that carries very little of this detail. News articles, which are also a basis for training AI systems, rarely use independent possessive pronouns. For example, instead of writing “hers was fast”, a journalist will favor “her car was fast”, even if it was obvious that “hers” referred to a car.

- The available data sets do not correctly label the independent possessive pronouns.
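The kind of imbalance described in these points can be checked on any text with a simple count. The sketch below is only an illustration, not Dr Munro's method: the pronoun lists, the `pronoun_counts` function and the sample sentence are all assumptions made up for this example, and the counting is a plain word match rather than real grammatical tagging.

```python
import re
from collections import Counter

# Hypothetical pronoun lists chosen for this illustration only.
MASCULINE = {"he", "him", "his", "himself"}
FEMININE = {"she", "her", "hers", "herself"}


def pronoun_counts(text):
    """Return (masculine total, feminine total, count of 'hers') for a text."""
    # Lowercase the text and split it into simple word tokens.
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(t for t in tokens if t in MASCULINE or t in FEMININE)
    masculine = sum(counts[p] for p in MASCULINE)
    feminine = sum(counts[p] for p in FEMININE)
    return masculine, feminine, counts["hers"]


# Made-up sample text, not taken from any real data set.
sample = (
    "He said his car was fast. She said hers was faster. "
    "He thanked him for his help."
)

masculine, feminine, hers = pronoun_counts(sample)
print("masculine pronouns:", masculine)
print("feminine pronouns:", feminine)
print("occurrences of 'hers':", hers)
```

Run on a large training corpus instead of one sample sentence, a count like this would show how rarely a word such as “hers” appears compared with masculine pronouns, which is the imbalance a model ends up learning from.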

Fixing a few areas in one specific data set will not end this issue, because the systems that are online now, like BERT, depend heavily on large amounts of data and on how often words recur in it. Researchers and companies like Google and Amazon have a lot of work to do to fix these things and ensure that AI-based systems are “gender neutral” and “non-discriminating”. Not long ago, we had the debate and controversy over inaccurate facial recognition linked to the subject’s skin color. The root of that problem is the same: the “base data set” and the “prevailing discriminating thought process”.

Written by : Sethunath U N

Courtesy:

https://bit.ly/33ESIYj (Dr Robert Munro)

Images : Careergirl.com, futureoflife.org